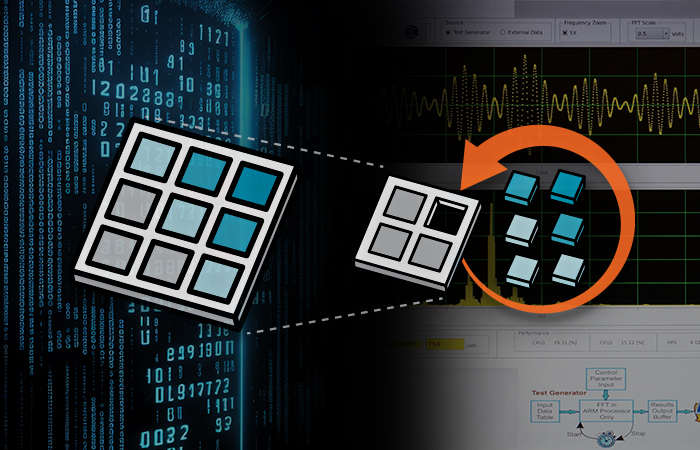

DFX Accelerators Demonstration Application

Dynamic Function eXchange (DFX) uses the flexibility of Programmable Logic (PL) devices to allow runtime modification of an operating hardware design. In a partitioned design, one part of the PL can be reconfigured while the rest of the system keeps running. The DFX Accelerated App illustrates the premise behind DFX by time-multiplexing hardware dynamically on Kria™.

Features:

- Adds flexibility in the algorithms available to apps

- Accelerates configurable computing

- Reduces size of PL needed to implement functions

- Optimizes cost of hardware implementation

- Improves total power consumption

- Enhances PL fault tolerance

- Complete application including HW design

Hardware Needed

Additional Tools and Resources

Frequently Asked Questions

Evaluating the app and the advantages of the underlying DFX accelerators does not require any experience in FPGA design.

This pre-built app is available free of charge from AMD, and DFX is available as a feature within the Vivado™ Design Suite. Starting with the 2019.1 tools release, no specific license code is necessary to use this feature in any edition of Vivado.

Four pieces of intellectual property (IP) are available specifically for use within DFX designs. There is no charge for any of these IP cores, and DFX designs do not require them. They are provided to help designers implement key aspects of a reconfigurable design quickly and easily. All four are found under the Dynamic Function eXchange heading within the IP catalog. For more information, please refer to UG909.

The basic premise of DFX is that the device hardware resources can be time-multiplexed, much as a microprocessor switches between tasks. Because the device switches tasks in hardware, it combines the flexibility of a software implementation with the performance of a hardware implementation. UG909 presents several scenarios that illustrate the power of this technology, and it may be helpful when architecting your end application.
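The time-multiplexing idea can be sketched as a small software model. This is only a conceptual illustration in Python, not the Kria or Vivado API: the `ReconfigurableSlot` class, the `run_tasks` helper, and the three accelerator names are hypothetical stand-ins for a reconfigurable partition and the functions swapped into it.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical accelerator functions standing in for hardware modules.
ACCELERATORS: Dict[str, Callable[[List[int]], List[int]]] = {
    "double": lambda data: [2 * x for x in data],
    "negate": lambda data: [-x for x in data],
    "running_sum": lambda data: [sum(data[: i + 1]) for i in range(len(data))],
}


@dataclass
class ReconfigurableSlot:
    """Toy model of one reconfigurable partition in the PL."""

    loaded: Optional[str] = None  # name of the function currently in the slot
    reconfig_count: int = 0       # how many times the slot was reprogrammed

    def load(self, name: str) -> None:
        """Swap a new function into the slot; a no-op if it is already loaded."""
        if name not in ACCELERATORS:
            raise KeyError(f"unknown accelerator: {name}")
        if self.loaded != name:
            self.loaded = name
            self.reconfig_count += 1

    def run(self, data: List[int]) -> List[int]:
        """Run the currently loaded function on the given data."""
        if self.loaded is None:
            raise RuntimeError("slot is empty; load an accelerator first")
        return ACCELERATORS[self.loaded](data)


def run_tasks(
    slot: ReconfigurableSlot, tasks: List[Tuple[str, List[int]]]
) -> List[List[int]]:
    """Time-multiplex a single slot across a sequence of (function, data) tasks."""
    results = []
    for name, data in tasks:
        slot.load(name)  # reconfigure only when the required function changes
        results.append(slot.run(data))
    return results


slot = ReconfigurableSlot()
results = run_tasks(
    slot,
    [
        ("double", [1, 2, 3]),
        ("double", [4]),
        ("negate", [1, 2]),
        ("running_sum", [1, 2, 3]),
    ],
)
print(results)
print(slot.reconfig_count)
```

In this model, one slot serves three different functions over time, and the caller (analogous to the static part of the design) keeps running between swaps. Real partial reconfiguration also carries a bitstream load time per swap, which this sketch does not model.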

Featured Documents

Accelerate Your AI-Enabled Edge Solution with Adaptive Computing

Learn all about adaptive SOMs, including examples of why and how they can be deployed in next-generation edge applications, and how smart vision providers benefit from the performance, flexibility, and rapid development that can only be achieved by an adaptive SOM.

Adaptive Computing in Robotics

Demand for robotics is accelerating rapidly. Building a robot that is safe and secure and can operate alongside humans is difficult enough, but getting these technologies to work together can be even more challenging. Complicating matters further, the addition of machine learning and artificial intelligence makes it harder to keep up with computational demands. Roboticists are turning toward adaptive computing platforms, which offer lower latency and deterministic, multi-axis control with built-in safety and security on an integrated, adaptable platform that is expandable for the future. Read the eBook to learn more.